(Source: wikipedia.com)

Investigators

Truyen Tran (Australia)

Shannon Ryan (Australia)

Hung Le (Australia)

Hieu-Chi Dam (Japan)

Alejandro Franco (France)

Cuong Dang (Singapore)

Thang Phan (Vietnam)

Members

Kien Do

Phuoc Nguyen

Dung Nguyen

Kha Pham

Tri Nguyen

Sherif Tawfik

Thang Nguyen

Dat Ho

Linh La

Alumni

Adam Beykikhoshk

Shivapratap Gopakumar

Vuong Le

Tu Nguyen

Trang Pham

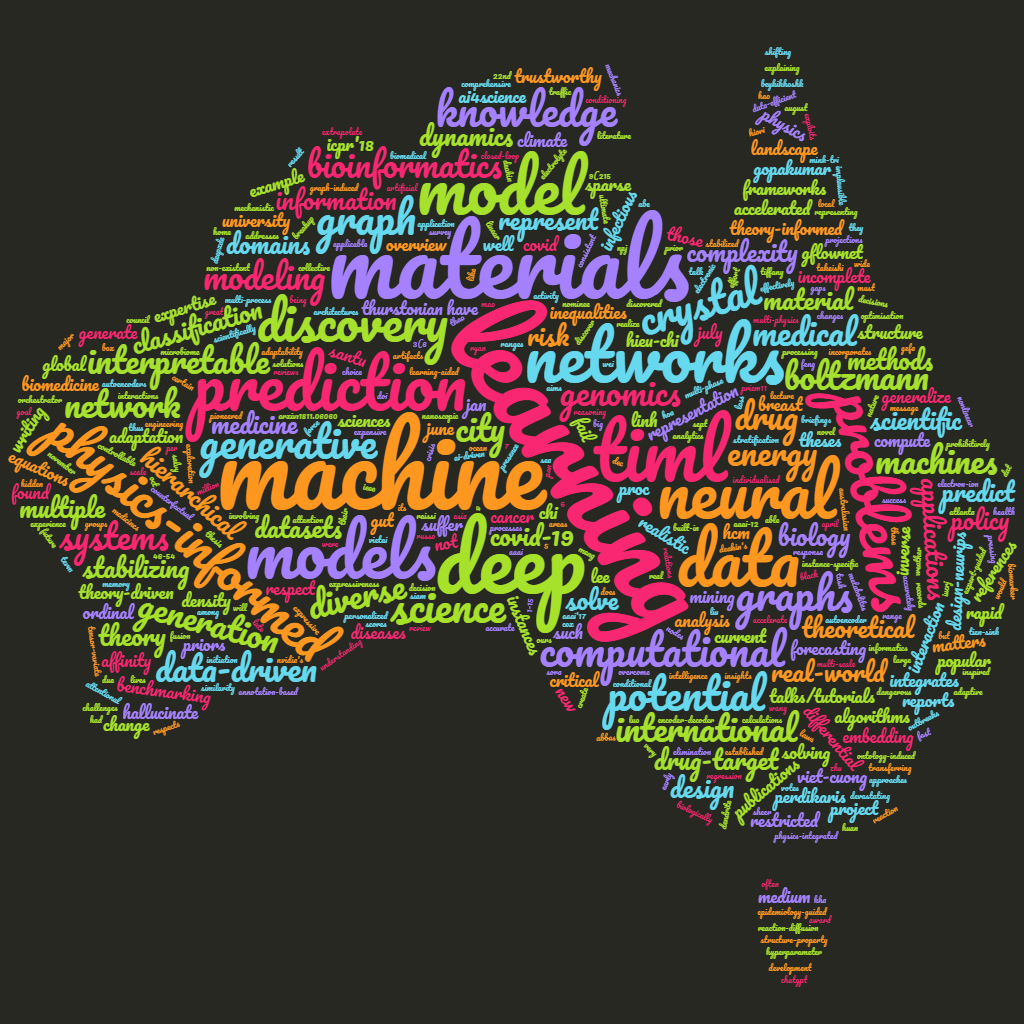

Theory-informed Machine Learning

Aims:

Our goal is to develop more generally applicable and trustworthy TiML models. We will create new TiML algorithms that are:


Areas:

Content:

Current leading data-driven learning systems such as ChatGPT, Gemini, and Sora often hallucinate non-existent, sometimes dangerous artifacts and generate scientifically implausible outcomes when applied to realistic science and engineering settings. Lacking built-in mechanisms to understand real-world phenomena, they fail to generalize beyond the range of their training data. In contrast, theory-driven models respect the underlying laws and extrapolate well, but they may be simplistic, incomplete, or prohibitively expensive to solve, and thus unable to accurately represent the dynamics and complexity of the real world. For example, a detailed theory-driven weather model would be unable to compute forecasts in real time because of the sheer complexity of modeling the atmosphere, ocean, land, and the interactions among them. Theory-informed Machine Learning (TiML) integrates the strengths of both approaches and shows great promise for providing trustworthy insights into pressing global and local problems such as infectious diseases, energy security, and climate change.
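The core idea of combining data and theory can be illustrated with a composite objective: a data term that fits observations plus a theory term that penalizes violations of a known governing equation. The sketch below is a minimal, hypothetical example assuming a toy first-order decay law du/dt = -k * u; the function names `theory_informed_loss` and `interpolating_polynomial`, the weight `lam`, the finite-difference derivative, and the decay law itself are illustrative assumptions, not the project's own formulation.

```python
import math
from typing import Callable, List, Sequence, Tuple


def theory_informed_loss(
    u: Callable[[float], float],
    t_obs: Sequence[float],
    y_obs: Sequence[float],
    t_col: Sequence[float],
    k: float,
    lam: float = 1.0,
    h: float = 1e-4,
) -> Tuple[float, float, float]:
    """Return (total, data_term, theory_term) for a candidate model u(t).

    Assumed toy theory (hypothetical): first-order decay, du/dt = -k * u.
    """
    # Data term: mean squared error against the observations.
    data_term = sum((u(t) - y) ** 2 for t, y in zip(t_obs, y_obs)) / len(t_obs)

    # Theory term: mean squared ODE residual at collocation points, with
    # du/dt approximated by a central finite difference of step h.
    def residual(t: float) -> float:
        dudt = (u(t + h) - u(t - h)) / (2 * h)
        return dudt + k * u(t)

    theory_term = sum(residual(t) ** 2 for t in t_col) / len(t_col)
    return data_term + lam * theory_term, data_term, theory_term


def interpolating_polynomial(ts: List[float], ys: List[float]) -> Callable[[float], float]:
    """Lagrange polynomial through (ts, ys): a purely data-driven fit."""
    def p(t: float) -> float:
        total = 0.0
        for i, (ti, yi) in enumerate(zip(ts, ys)):
            weight = 1.0
            for j, tj in enumerate(ts):
                if j != i:
                    weight *= (t - tj) / (ti - tj)
            total += yi * weight
        return total
    return p


# Usage: two candidates that both match three noise-free observations.
k = 0.5
t_obs = [0.0, 1.0, 2.0]
y_obs = [math.exp(-k * t) for t in t_obs]
t_col = [0.1 * n for n in range(1, 50)]  # extends well beyond the data range


def theory_consistent(t: float) -> float:
    return math.exp(-k * t)


data_only = interpolating_polynomial(t_obs, y_obs)

loss_theory = theory_informed_loss(theory_consistent, t_obs, y_obs, t_col, k)
loss_data_only = theory_informed_loss(data_only, t_obs, y_obs, t_col, k)
print(loss_theory)     # theory term close to zero
print(loss_data_only)  # data term zero, theory term clearly positive
```

Both candidates fit the observations exactly, so the data term alone cannot tell them apart; only the theory term, evaluated at collocation points outside the observed range, exposes the purely data-driven fit. In practice the candidate would be a trainable model and `lam` a tuned weight, which is one source of the training difficulties discussed below.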

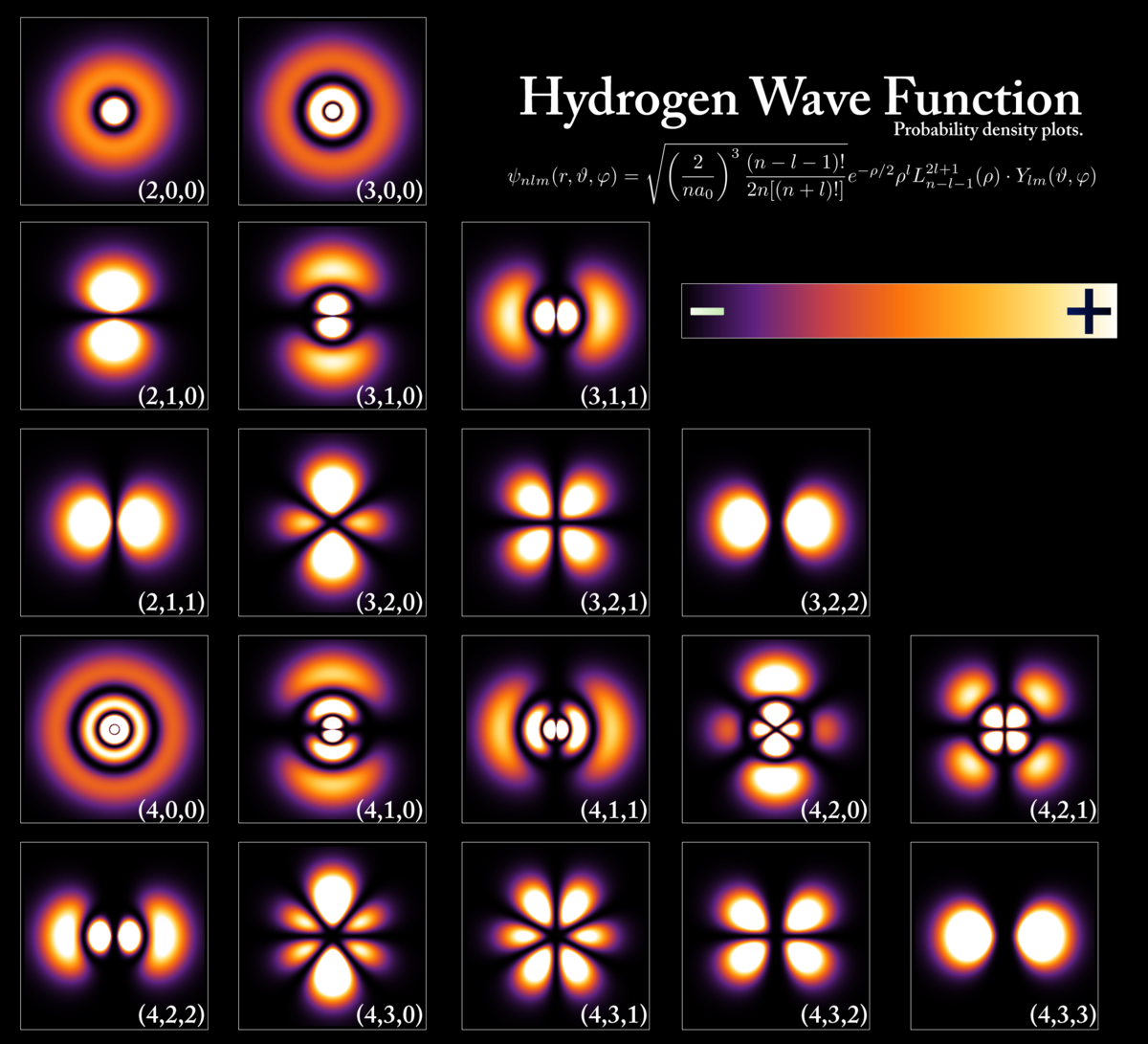

We have pioneered the development of TiML to solve diverse real-world problems. For example, our ontology-induced model of medical risk discovered risk factors that were more certain and consistent with established medicine than those found by purely data-driven methods. Our epidemiology-guided neural network model of COVID-19 dynamics had both its design and parameters informed by theory and past outbreaks. This approach greatly outperformed standard data-driven machine learning and mechanistic models, helping Ho Chi Minh City, home to over 10 million people, make critical decisions to mitigate a devastating late 2021 COVID crisis that took over 20,000 lives. Likewise, our physics-informed graph neural network integrates the external potential term found in density functional theory calculations to predict the potential energy surface in materials science applications. Our model is more accurate in predicting the total energy per atom of a defective system, as well as the structural changes that result from the presence of a defect in a material. Another TiML work of ours develops a crystal generative model that exploits the symmetry of crystal groups and incorporates an expert-guided reward function. This results in much faster and more stable crystal generation compared to competing data-driven methods that do not respect these theoretical priors.
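To make the idea of an epidemiology-guided model more concrete, the sketch below scores a candidate epidemic trajectory against a discrete-time SIR (susceptible-infected-recovered) compartment model. A penalty of this kind can be added to a data-fitting loss so that trajectories the theory forbids are discouraged. This is an illustrative assumption only: the text above does not say that the Ho Chi Minh City model used an SIR structure, forward-Euler steps, or the hypothetical helpers `simulate_sir` and `sir_residual_loss`.

```python
from typing import List, Sequence, Tuple


def simulate_sir(
    beta: float, gamma: float, s0: float, i0: float, steps: int, dt: float = 1.0
) -> Tuple[List[float], List[float], List[float]]:
    """Forward-Euler SIR trajectory, expressed as population fractions."""
    s, i, r = [s0], [i0], [1.0 - s0 - i0]
    for _ in range(steps):
        new_inf = beta * s[-1] * i[-1] * dt  # transmission S -> I
        new_rec = gamma * i[-1] * dt         # recovery I -> R
        s.append(s[-1] - new_inf)
        i.append(i[-1] + new_inf - new_rec)
        r.append(r[-1] + new_rec)
    return s, i, r


def sir_residual_loss(
    s: Sequence[float], i: Sequence[float], r: Sequence[float],
    beta: float, gamma: float, dt: float = 1.0,
) -> float:
    """Mean squared violation of the discrete SIR update rules."""
    n = len(s) - 1
    total = 0.0
    for t in range(n):
        infections = beta * s[t] * i[t] * dt
        recoveries = gamma * i[t] * dt
        total += (s[t + 1] - s[t] + infections) ** 2
        total += (i[t + 1] - i[t] - infections + recoveries) ** 2
        total += (r[t + 1] - r[t] - recoveries) ** 2
    return total / (3 * n)


# Usage: a trajectory generated by the model satisfies it up to rounding,
# while the same trajectory judged with a wrong transmission rate does not.
s, i, r = simulate_sir(beta=0.3, gamma=0.1, s0=0.99, i0=0.01, steps=60)
print(sir_residual_loss(s, i, r, beta=0.3, gamma=0.1))  # essentially zero
print(sir_residual_loss(s, i, r, beta=0.6, gamma=0.1))  # clearly positive
```

The residual deliberately reuses the simulator's forward-Euler update, so it vanishes (up to floating-point rounding) for model-consistent trajectories. A continuous-time variant would instead differentiate a network's outputs with respect to time, in the style of a physics-informed neural network.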

However, TiML tends to be instance-specific and demands extensive domain and ML expertise to implement in practice. Despite its ultimate success, our experience with COVID-19 modeling shows that TiML models are very hard to train: they have an extremely complex loss landscape, suffer from instability, and require major effort in data conditioning and hyperparameter tuning. To realize TiML's global potential for solving problems across diverse real-world instances and domains, key obstacles around model expressiveness and adaptability must be overcome.

This project addresses critical gaps in current TiML, which struggles to adapt, capture complexity, and generalize across diverse problem instances, spatiotemporal domains, and datasets.

- AI4Science: A research program, Talk @Deakin, Nov 2024.

- Generative AI to accelerate discovery of materials, Keynote @PRICM11, Nov 2023.

- AI for automated materials discovery via learning to represent, predict, generate and explain, @Thuyloi University, May 2023.

- Machine learning and reasoning for drug discovery, Tutorial @ECML-PKDD, Sept 2021.

- Climate change: Challenges and AI-driven solutions, @Swinburne Vietnam, Hanoi, Vietnam, Dec 2019.

- Modern AI for drug discovery, VietAI Summit, Nov 2019.

- Lecture on Deep learning for biomedicine, Southeast Asia Machine Learning (SEA ML) School, Depok, Greater Jakarta, Indonesia, July 2019.

- Deep learning for genomics: Present and future, Genomic Medicine 2019, Hanoi, Vietnam, June 2019.

- AI for matters, Phenikaa University, Hanoi, Vietnam, Jan 2019.

- Deep learning for biomedicine: Genomics and Drug design, Institute of Big Data, Hanoi, Vietnam, Jan 2019.

- The dynamics of knowledge, Truyen Tran, Medium, October 2024.

- AI, math, medicine, software, and the sciences: A shifting landscape, Truyen Tran, Medium, August 2024.

- Analysis and forecasting of COVID-19 in Ho Chi Minh city. Tran, T., Tran, Q., & Tech4Covid Group. Report to HCM City Council & COVID Response Task Force, 13/08/2021.

- NVIDIA's Modulus

- Materials Project

- Dat Ho (PhD, with A/Prof Shannon Ryan), PIML for breakup mechanics, 2024-.

- Linh La (PhD, with Dr Sherif Abbas), Physics-informed ML for materials, 2024-.

- Minh-Thang Nguyen (PhD), Knowledge-guided machine learning, 2023-.

- Tri Nguyen (PhD, with Dr Thin Nguyen), Decoding the drug-target interaction mechanism using deep learning, 2019-2022. Nominee of Deakin's Thesis Award 2022.

- Kien Do (PhD), Novel deep architectures for representation learning, 2017-2020.

- Trang Pham (PhD), Recurrent neural networks for structured data, 2016-2019.

- MP-PINN: A Multi-Phase Physics-Informed Neural Network for epidemic forecasting, Thang Nguyen, Dung Nguyen, Kha Pham and Truyen Tran, in Proceedings of the 22nd Australasian Data Science and Machine Learning Conference (AusDM'24), Melbourne, Australia, 25-27 November 2024.

- Embedding material graphs using the electron-ion potential: Application to material fracture, Tawfik, Sherif Abdulkader, Tri Minh Nguyen, Salvy P. Russo, Truyen Tran, Sunil Gupta, and Svetha Venkatesh. Digital Discovery, Oct 2024.

- Scale matters: simulation of nanoscopic dendrite initiation in the lithium solid electrolyte interphase using a machine learning potential, Tawfik, Sherif Abdulkader, Linh La, Tri Nguyen, Truyen Tran, Sunil Gupta, and Svetha Venkatesh, ChemRxiv. 2024; doi:10.26434/chemrxiv-2024-86s6m.

- Efficient symmetry-aware materials generation via hierarchical generative flow networks, Tri Minh Nguyen, Sherif Abdulkader Tawfik, Truyen Tran, Sunil Gupta, Santu Rana, Svetha Venkatesh, arXiv preprint, arXiv:2411.04323.

- PINNs for medical image analysis: A survey, C Banerjee, K Nguyen, O Salvado, T Tran, C Fookes, arXiv preprint, arXiv:2408.01026.

- Towards understanding structure–property relations in materials with interpretable deep learning, Tien-Sinh Vu, Minh-Quyet Ha, Duong Nguyen Nguyen, Viet-Cuong Nguyen, Yukihiro Abe, Truyen Tran, Huan Tran, Hiori Kino, Takashi Miyake, Koji Tsuda, Hieu-Chi Dam, npj Computational Materials, 9(215), (2023).

- Hierarchical GFlowNet for crystal structure generation, Nguyen, Tri, Sherif Tawfik, Truyen Tran, Sunil Gupta, Santu Rana, and Svetha Venkatesh. In AI for Accelerated Materials Design-NeurIPS 2023 Workshop. 2023.

- Machine learning-aided exploration of ultrahard materials, Tawfik, Sherif Abdulkader, Phuoc Nguyen, Truyen Tran, Tiffany R. Walsh, and Svetha Venkatesh. The Journal of Physical Chemistry C 126, no. 37 (2022): 15952-15961.

- Learning to discover medicines, Nguyen, Minh-Tri, Thin Nguyen, and Truyen Tran. International Journal of Data Science and Analytics (2022): 1-16.

- Mitigating cold-start problems in drug-target affinity prediction with interaction knowledge transferring, Nguyen, Tri Minh, Thin Nguyen, and Truyen Tran. Briefings in Bioinformatics 23, no. 4 (2022): bbac269.

- Explaining black box drug target prediction through model agnostic counterfactual samples, Nguyen, Tri Minh, Thomas P. Quinn, Thin Nguyen, and Truyen Tran. IEEE/ACM Transactions on Computational Biology and Bioinformatics (2022).

- GEFA: Early fusion approach in drug-target affinity prediction, Tri Minh Nguyen, Thin Nguyen, Thao Minh Le, Truyen Tran, IEEE/ACM Transactions on Computational Biology and Bioinformatics, 2021.

- Personalized Annotation-based Networks (PAN) for the prediction of breast cancer relapse, T Nguyen, SC Lee, TP Quinn, B Truong, X Li, T Tran, S Venkatesh, TD Le, IEEE/ACM Transactions on Computational Biology and Bioinformatics, 2021.

- Deep in the bowel: Highly interpretable neural encoder-decoder networks predict gut metabolites from gut microbiome, V Le, TP Quinn, T Tran, S Venkatesh, BMC Genomics (21), 07/2020.

- DeepTRIAGE: Interpretable and individualised biomarker scores using attention mechanism for the classification of breast cancer sub-types, A Beykikhoshk, TP Quinn, SC Lee, T Tran, S Venkatesh, BMC Medical Genomics, 2020.

- Incomplete conditional density estimation for fast materials discovery, Phuoc Nguyen, Truyen Tran, Sunil Gupta, Svetha Venkatesh. SDM'19.

- Committee machine that votes for similarity between materials; Duong-Nguyen Nguyen, Tien-Lam Pham, Viet-Cuong Nguyen, Tuan-Dung Ho, Truyen Tran, Keisuke Takahashi and Hieu-Chi Dam. IUCrJ, 2018 Nov 1; 5(Pt 6): 830–840.

- Graph transformation policy network for chemical reaction prediction, Kien Do, Truyen Tran, Svetha Venkatesh, KDD'19.

- Attentional multilabel learning over graphs: A message passing approach, K Do, T Tran, T Nguyen, S Venkatesh, Machine Learning, 2019.

- Hybrid generative-discriminative models for inverse materials design, Phuoc Nguyen, Truyen Tran, Sunil Gupta, Svetha Venkatesh. arXiv preprint arXiv:1811.06060, 2019.

- Knowledge Graph Embedding with Multiple Relation Projections, Kien Do, Truyen Tran, Svetha Venkatesh, ICPR'18.

- Graph memory networks for molecular activity prediction, Trang Pham, Truyen Tran, Svetha Venkatesh, ICPR'18.

- Column Networks for Collective Classification, Trang Pham, Truyen Tran, Dinh Phung, Svetha Venkatesh, AAAI'17.

- Graph classification via deep learning with virtual nodes, Trang Pham, Truyen Tran, Hoa Dam, Svetha Venkatesh, Third Representation Learning for Graphs Workshop (ReLiG 2017).

- Stabilizing Linear Prediction Models using Autoencoder, Shivapratap Gopakumar, Truyen Tran, Dinh Phung, Svetha Venkatesh, International Conference on Advanced Data Mining and Applications (ADMA 2016).

- Neural Choice by Elimination via Highway Networks, Truyen Tran, Dinh Phung and Svetha Venkatesh, PAKDD workshop on Biologically Inspired Techniques for Data Mining (BDM'16), April 19-22 2016, Auckland, NZ.

- Graph-induced restricted Boltzmann machines for document modeling, Tu Dinh Nguyen, Truyen Tran, Dinh Phung, and Svetha Venkatesh, Information Sciences, 2016.

- Stabilizing Sparse Cox Model using Statistic and Semantic Structures in Electronic Medical Records. Shivapratap Gopakumar, Tu Dinh Nguyen, Truyen Tran, Dinh Phung, and Svetha Venkatesh, PAKDD'15, HCM City, Vietnam, May 2015.

- Tensor-variate Restricted Boltzmann Machines, Tu Dinh Nguyen, Truyen Tran, Dinh Phung, and Svetha Venkatesh, AAAI 2015.

- Stabilizing high-dimensional prediction models using feature graphs, Shivapratap Gopakumar, Truyen Tran, Tu Dinh Nguyen, Dinh Phung, and Svetha Venkatesh, IEEE Journal of Biomedical and Health Informatics, 2014, DOI: 10.1109/JBHI.2014.2353031.

- Stabilized sparse ordinal regression for medical risk stratification, Truyen Tran, Dinh Phung, Wei Luo, and Svetha Venkatesh, Knowledge and Information Systems, 2014, DOI: 10.1007/s10115-014-0740-4.

- Thurstonian Boltzmann machines: Learning from multiple inequalities, Truyen Tran, Dinh Phung, and Svetha Venkatesh, In Proc. of 30th International Conference on Machine Learning (ICML’13), Atlanta, USA, June, 2013.

- A Sequential Decision Approach to Ordinal Preferences in Recommender Systems, Truyen Tran, Dinh Phung, Svetha Venkatesh, in Proc. of 25-th Conference on Artificial Intelligence (AAAI-12), Toronto, Canada, July 2012.

- Karniadakis, G. E., Kevrekidis, I. G., Lu, L., Perdikaris, P., Wang, S., & Yang, L. (2021). Physics-informed machine learning. Nature Reviews Physics, 3(6), 422-440.

- Nguyen, T. M., Tawfik, S. A., Tran, T., Gupta, S., Rana, S., & Venkatesh, S. (2023). Hierarchical GFlownet for Crystal Structure Generation. In AI for Accelerated Materials Design-NeurIPS 2023 Workshop (pp. 1-15).

- Hao, Z., Liu, S., Zhang, Y., Ying, C., Feng, Y., Su, H., & Zhu, J. (2022). Physics-informed machine learning: A survey on problems, methods and applications. Preprint.

- Kovachki, N. B., Lanthaler, S., & Stuart, A. M. (2024). Operator Learning: Algorithms and Analysis. Preprint.

- Lu, L., Meng, X., Mao, Z., & Karniadakis, G. E. (2021). DeepXDE: A deep learning library for solving differential equations. SIAM Review, 63(1), 208-228.

- Takeishi, N., & Kalousis, A. (2021). Physics-integrated variational autoencoders for robust and interpretable generative modeling. Advances in Neural Information Processing Systems, 34, 14809-14821.

- Raissi, M., Perdikaris, P., & Karniadakis, G. E. (2019). Physics-informed neural networks: A deep learning framework for solving forward and inverse problems involving nonlinear partial differential equations. Journal of Computational Physics, 378, 686-707.

- Tran, T., Phung, D., & Venkatesh, S. (2013, May). Thurstonian Boltzmann machines: learning from multiple inequalities. In International Conference on Machine Learning (pp. 46-54). PMLR.